Wearable Computing (accelerometers, heart monitors, actigraphs)

Wearable computing refers to a new class of measurement devices that can be attached to the human body to measure human activity, biosignals, or environmental factors in real time. Examples of wearable devices are accelerometers, which can measure acceleration at the hip or wrist; heart monitors, which measure heart rate or systolic blood pressure; and actigraphs, which measure the number of activities per unit of time. These devices can often transmit signals in real time to a central monitoring station. Understanding these signals and their distribution, both within a person and across the population, can help clarify the association between these new measurements and human health. The big hope is that these devices and new technologies can replace self-reports, which are notoriously unreliable, with silent, reliable, tireless observers.

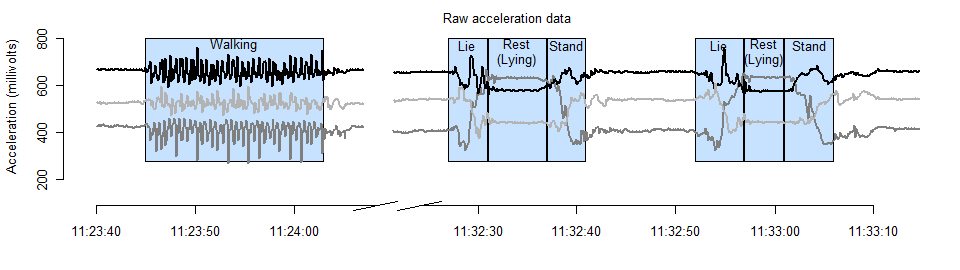

To provide more insight into the type of data that one can record, consider data generated by a single accelerometer positioned on the subject’s hip at the apex of the left iliac crest. These data were used in the paper "Movelets: A Dictionary of Movement", whose lead author is Jiawei Bai. The accelerometer is built on the core chip MMA7260Q by Freescale™ and records acceleration in three mutually orthogonal directions for a wide range of sampling frequencies (time points per second) and sensitivities (acceleration per unit of scale). Data were collected during in-laboratory sessions in which subjects performed a collection of activities, including resting, walking, lying, repeated chair stands, lifting an object from the floor, up-and-go, and standing to reclining on a couch. The figure above displays two segments of accelerometer data. In the first segment, the subject stands, walks twenty meters, and stands. In the second segment, the subject performs two replicates of lying down and standing up; during each replicate, the subject lies down from a standing position, rests for several seconds in the lying position, and rises to a standing position. Three acceleration channels, or axes, are shown, and activity labels are provided.

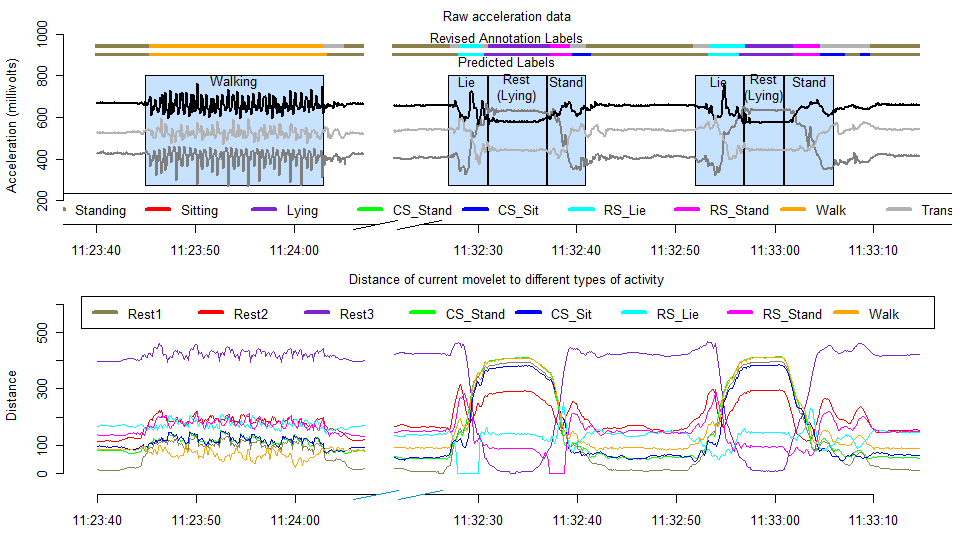

Many research problems can be posed with accelerometer data. In this case we were interested in predicting the type of activity given the observed accelerometer time series. A close inspection of the accelerometer time series should build confidence that, at the very least, the human eye can distinguish between the various activities. A fundamental problem was to imitate what the human eye can do. Inspired by speech recognition algorithms, we came up with movelets, which proved to be a very powerful tool for predicting a wide variety of movement types. Essentially, a movelet is the accelerometry data in a short time interval (1 second in this case). Thus, training data (which contain activity labels) are partitioned into overlapping 1-second movelets. Then, to a movelet without an activity label, we simply assign the activity label of the closest labeled movelet. The figure above is a visual representation of the process. The top two bars in the top panel are the observed and estimated activity labels. The lower panel displays the distance from each individual movelet to the closest movelet in each dictionary.
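The nearest-movelet idea can be illustrated with a minimal sketch. This is not the implementation from the paper: the function names (`build_movelets`, `distance`, `predict`) are hypothetical, the Euclidean distance is an assumed choice of "closest", the rule that a movelet inherits the label of its first sample is an assumption, and the toy data use a made-up rate of 10 samples per second so that a 1-second movelet has 10 samples.

```python
import math


def build_movelets(signal, labels, window):
    """Cut a labeled signal into overlapping windows ("movelets").

    signal: list of (x, y, z) acceleration tuples, one per time point.
    labels: one activity label per time point.
    """
    movelets = []
    for start in range(len(signal) - window + 1):
        chunk = signal[start:start + window]
        # Assumption: a movelet inherits the label of its first sample.
        movelets.append((chunk, labels[start]))
    return movelets


def distance(a, b):
    """Euclidean distance between two equal-length movelets (assumed metric)."""
    return math.sqrt(sum((p - q) ** 2
                         for sample_a, sample_b in zip(a, b)
                         for p, q in zip(sample_a, sample_b)))


def predict(dictionary, signal, window):
    """Give each window of an unlabeled signal the label of its closest movelet."""
    predictions = []
    for start in range(len(signal) - window + 1):
        query = signal[start:start + window]
        _, label = min(dictionary, key=lambda m: distance(m[0], query))
        predictions.append(label)
    return predictions


# Toy training data (synthetic, not from the study): flat "stand", periodic "walk".
window = 10  # 1 second at the hypothetical 10 samples per second
stand = [(0.0, 0.0, 1.0)] * 50
walk = [(math.sin(2 * math.pi * t / 10), 0.0, 1.0) for t in range(50)]
dictionary = build_movelets(stand + walk, ["stand"] * 50 + ["walk"] * 50, window)

# Unlabeled walking signal with a different phase than the training walk.
new_walk = [(math.sin(2 * math.pi * (t + 3) / 10), 0.0, 1.0) for t in range(30)]
print(predict(dictionary, new_walk, window)[:5])
```

The brute-force search over the whole dictionary keeps the sketch short; with real recordings one would likely want a faster nearest-neighbor search and some smoothing of the predicted labels over time.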

One question that is often asked is: "And how is this different from speech recognition?" The fundamental idea is similar, though the signal-to-noise ratio.